Neural implicit rendering techniques have evolved rapidly in recent years and have shown great advantages in novel view synthesis and 3D scene reconstruction. However, existing neural rendering methods for editing purposes offer limited functionality (e.g., rigid transformation) or are not applicable to fine-grained editing of general objects from daily life. In this paper, we present a novel mesh-based representation that encodes the neural implicit field with disentangled geometry and texture codes on mesh vertices, which facilitates a set of editing functionalities, including mesh-guided geometry editing and designated texture editing with texture swapping, filling, and painting operations. To this end, we develop several techniques, including learnable sign indicators to magnify the spatial distinguishability of the mesh-based representation, a distillation and fine-tuning mechanism to ensure steady convergence, and a spatial-aware optimization strategy to realize precise texture editing. Extensive experiments and editing examples on both real and synthetic data demonstrate the superiority of our method in representation quality and editing ability.

Framework Overview

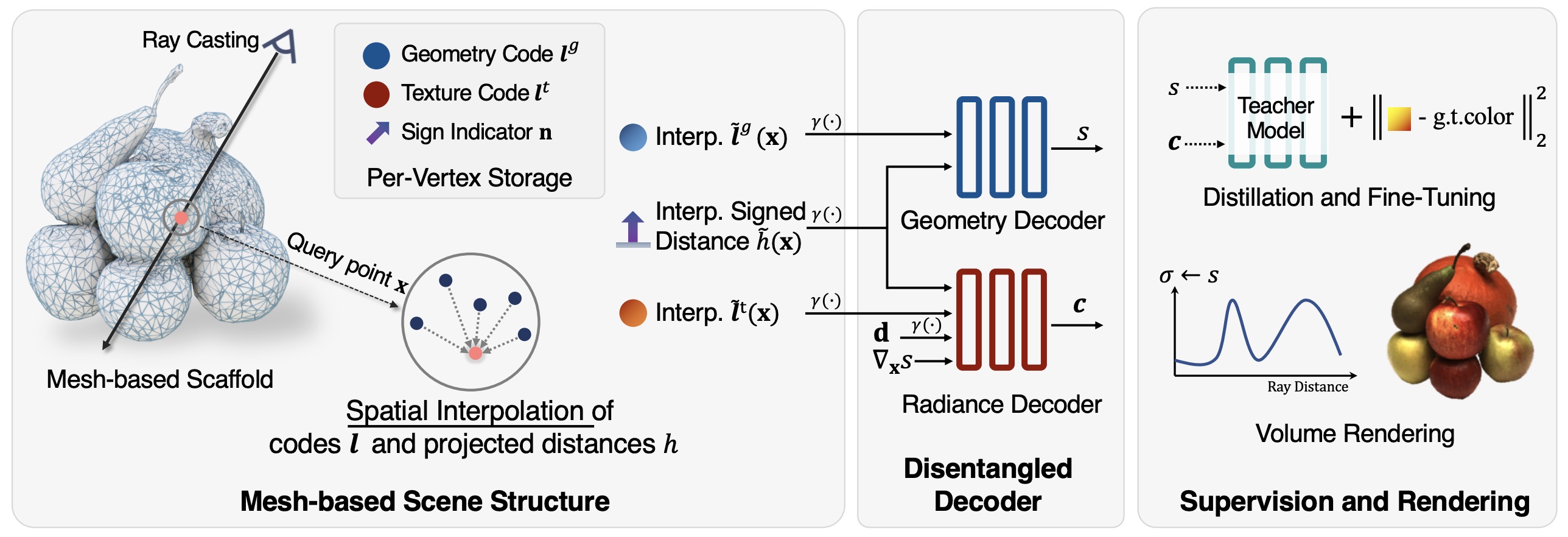

We present a novel representation for neural rendering, which encodes a neural implicit field on a mesh-based scaffold. Each mesh vertex possesses a geometry code ${\boldsymbol{l}}^{g}$, a texture code ${\boldsymbol{l}}^{t}$, and a sign indicator $\textbf{n}$ for computing the projected distance $h$. For a query point $\boldsymbol{x}$ along a cast camera ray, we retrieve interpolated codes and signed distances from the nearby mesh vertices, and forward them to the geometry and radiance decoders to obtain the SDF value $s$ and color $\textbf{c}$.
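The retrieval step described above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the brute-force nearest-neighbor search, the inverse-distance weighting, the default `k`, the way $h$ is formed (projecting the vertex-to-query offset onto each sign indicator and blending), and the function name `interpolate_at_query` are all assumptions made for illustration. The geometry and radiance decoders are omitted.

```python
import numpy as np

def interpolate_at_query(x, vertices, geo_codes, tex_codes, sign_indicators,
                         k=8, eps=1e-8):
    """Gather blended per-vertex features for one query point x of shape (3,).

    All weighting choices here are assumptions for illustration only.
    """
    # Offsets from every vertex to the query point, and their lengths.
    offsets = x[None, :] - vertices              # (V, 3)
    dists = np.linalg.norm(offsets, axis=1)      # (V,)

    # Brute-force k nearest vertices (a real system would use a spatial index).
    k = min(k, len(vertices))
    nearest = np.argsort(dists)[:k]

    # Inverse-distance weights, normalized to sum to one (assumed scheme).
    w = 1.0 / (dists[nearest] + eps)
    w = w / w.sum()

    # Blend the geometry and texture codes of the selected vertices.
    l_g = w @ geo_codes[nearest]
    l_t = w @ tex_codes[nearest]

    # Projected distance: offset projected onto each vertex's sign indicator,
    # then blended with the same weights. The sign tells which side of the
    # surface the query point lies on.
    h_per_vertex = np.einsum("kd,kd->k", offsets[nearest], sign_indicators[nearest])
    h = float(w @ h_per_vertex)
    return l_g, l_t, h

# Toy scaffold: a unit square in the z = 0 plane, sign indicators pointing along +z.
vertices = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0]], dtype=float)
sign_indicators = np.tile(np.array([0.0, 0.0, 1.0]), (4, 1))
geo_codes = np.eye(4)          # one-hot toy codes, one per vertex
tex_codes = 2.0 * np.eye(4)

l_g, l_t, h_above = interpolate_at_query(
    np.array([0.5, 0.5, 0.2]), vertices, geo_codes, tex_codes, sign_indicators)
_, _, h_below = interpolate_at_query(
    np.array([0.5, 0.5, -0.2]), vertices, geo_codes, tex_codes, sign_indicators)
```

In this toy setup the query point is equidistant from all four vertices, so the codes are averaged evenly, and the two queries on opposite sides of the plane receive projected distances of opposite sign, which is the kind of spatial distinction the sign indicator is meant to provide.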

Our Functionalities

Our representation supports a series of editing functionalities, including mesh-guided geometry editing and designated texture editing: texture swapping between two objects, texture filling with materials from pre-captured objects, and texture painting by transferring user paintings from a 2D image to the 3D field.

Geometry Editing

Since the neural implicit field has been tightly aligned to the mesh surface, we simply deform the corresponding mesh, and the change will synchronously take effect on the implicit field and the rendered object.
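A toy NumPy sketch can make this concrete. Because the codes live on the vertices, moving the vertices moves the field with them. The blending function `nearest_blend`, the inverse-distance weights, and the choice of a rigid edit are assumptions for illustration, not the authors' code.

```python
import numpy as np

def nearest_blend(x, vertices, codes, k=3, eps=1e-8):
    """Inverse-distance blend of the codes of the k nearest vertices (assumed scheme)."""
    d = np.linalg.norm(vertices - x, axis=1)
    idx = np.argsort(d)[:k]
    w = 1.0 / (d[idx] + eps)
    return (w / w.sum()) @ codes[idx]

# Toy scaffold with one-hot codes attached to its four vertices.
vertices = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0]], dtype=float)
codes = np.eye(4)

# A rigid edit: rotate 90 degrees about the z axis, then translate.
theta = np.pi / 2
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])
t = np.array([2.0, 0.0, 0.5])
deformed_vertices = vertices @ R.T + t   # only the vertex positions change

# A point near the original mesh, and the same point carried along by the edit.
x = np.array([0.2, 0.3, 0.1])
x_moved = R @ x + t

before = nearest_blend(x, vertices, codes)
after = nearest_blend(x_moved, deformed_vertices, codes)
```

Here `before` and `after` agree up to floating-point error, since a rigid motion preserves the distances between the query point and the vertices. For a non-rigid deformation the blend weights in this sketch would change with the local stretching, so the match would only be approximate.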

Texture Swapping

We can transfer the texture from the red area to the yellow area according to user-selected vertices. As shown above, our method successfully exchanges textures while preserving rich details.
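Since texture is stored as per-vertex codes that are disentangled from geometry, a swap can be pictured as exchanging the texture codes of two vertex sets while leaving the geometry codes alone. The sketch below assumes a one-to-one correspondence between the two selections is already given; how that correspondence is established is not described here, and the helper name `swap_texture_codes` is hypothetical.

```python
import numpy as np

def swap_texture_codes(tex_codes, src_idx, dst_idx):
    """Return a copy of tex_codes with rows src_idx and dst_idx exchanged.

    Assumes src_idx[i] corresponds to dst_idx[i] (a hypothetical, precomputed mapping).
    """
    src_idx = np.asarray(src_idx)
    dst_idx = np.asarray(dst_idx)
    if src_idx.shape != dst_idx.shape:
        raise ValueError("source and destination selections must have the same size")
    swapped = tex_codes.copy()
    swapped[src_idx] = tex_codes[dst_idx]
    swapped[dst_idx] = tex_codes[src_idx]
    return swapped

# Six vertices with 2-dimensional toy texture codes.
tex_codes = np.arange(12, dtype=float).reshape(6, 2)
red_area = [0, 1]      # user-selected source vertices
yellow_area = [4, 5]   # user-selected target vertices

swapped = swap_texture_codes(tex_codes, red_area, yellow_area)
```

Vertices outside the two selections keep their original texture codes, and the geometry codes are never touched, so the shape of the object is unaffected by the swap in this sketch.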

Texture Filling

We can fill the object textures with texture templates from several pre-captured NeuMesh models. The edited objects exhibit the texture of the templates and also show rich view-dependent effects, such as a shiny plate and golden metal.

Texture Painting

We can transfer a painting from a single 2D image to the neural implicit field, allowing free preview of the painted object in rendered novel views.

@inproceedings{neumesh,
title={NeuMesh: Learning Disentangled Neural Mesh-based Implicit Field for Geometry and Texture Editing},
author={{Chong Bao and Bangbang Yang} and Zeng Junyi and Bao Hujun and Zhang Yinda and Cui Zhaopeng and Zhang Guofeng},
booktitle={European Conference on Computer Vision (ECCV)},
year={2022}
}