Despite the great success of 2D editing with user-friendly tools such as Photoshop, semantic strokes, or even text prompts, similar capabilities in 3D remain limited: existing approaches either rely on 3D modeling skills or support editing within only a few object categories. In this paper, we present a novel semantic-driven NeRF editing approach that enables users to edit a neural radiance field with a single image and faithfully delivers edited novel views with high fidelity and multi-view consistency. To achieve this goal, we propose a prior-guided editing field to encode fine-grained geometric and texture edits in 3D space, and develop a series of techniques to aid the editing process: cyclic constraints with a proxy mesh to facilitate geometric supervision, a color compositing mechanism to stabilize semantic-driven texture editing, and a feature-cluster-based regularization to keep irrelevant content unchanged. Extensive experiments and editing examples on both real-world and synthetic data demonstrate that our method achieves photo-realistic 3D editing using only a single edited image, pushing the boundary of semantic-driven editing in real-world 3D scenes.

Framework Overview

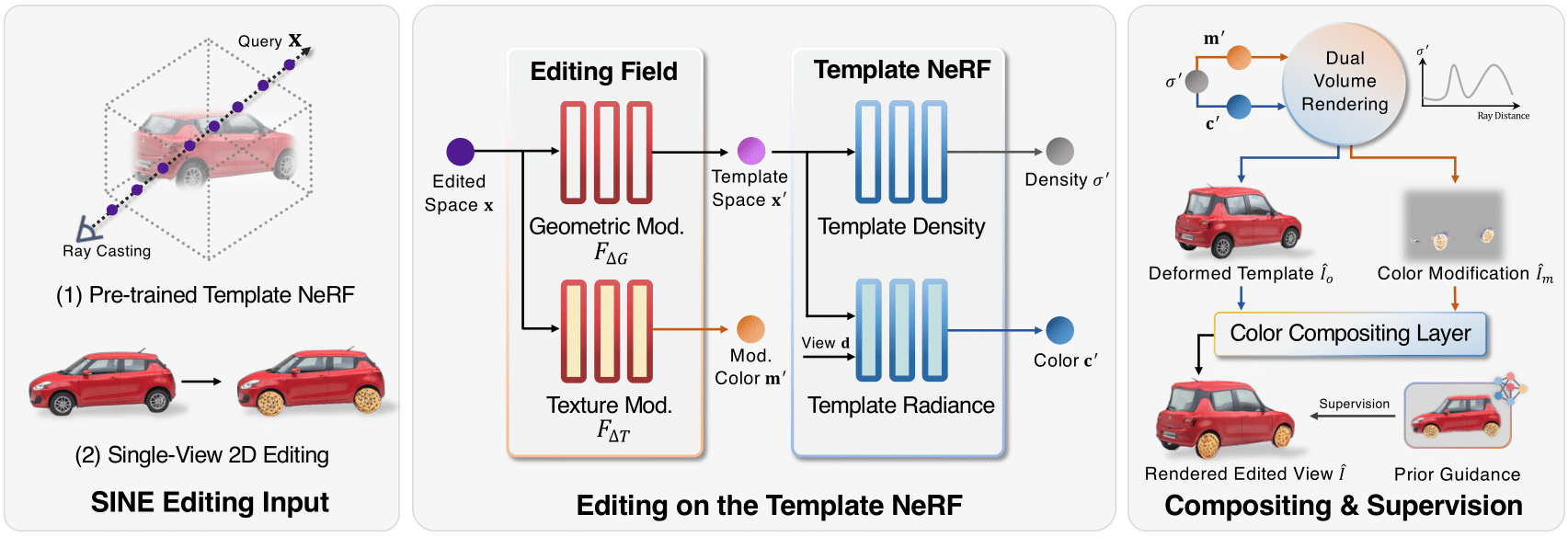

We encode geometric and texture changes over the original template NeRF with a prior-guided editing field, where the geometric modification field $F_{\Delta G}$ transforms a query point $\mathbf{x}$ in the edited space into $\mathbf{x}'$ in the template space, and the texture modification field $F_{\Delta T}$ encodes the modification colors $\mathbf{m}'$. We then render the deformed template image $\hat{I}_{o}$ and the color modification image $\hat{I}_{m}$ from all the queries, and use a color compositing layer to blend $\hat{I}_{o}$ and $\hat{I}_{m}$ into the edited view $\hat{I}$.
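The data flow described above can be illustrated with a toy, single-ray sketch. Everything here is an assumption for illustration only: `template_nerf`, `geometric_field` (a fixed offset standing in for $F_{\Delta G}$), and `texture_field` (a constant tint standing in for $F_{\Delta T}$) are hypothetical hand-written functions, not trained networks. We also assume both images share the template density, and a fixed linear `blend` replaces the learned color compositing layer, whose exact form is not given here. Only the volume-rendering quadrature is the standard NeRF formula.

```python
import numpy as np

# All fields below are hypothetical toy stand-ins, not SINE's trained networks.
def template_nerf(x):
    """Toy template field: density and RGB at template-space points x of shape (N, 3)."""
    sigma = np.exp(-np.sum(x ** 2, axis=-1))      # soft density blob at the origin
    rgb = 0.5 + 0.5 * np.tanh(x)                  # position-dependent color in (0, 1)
    return sigma, rgb

def geometric_field(x):
    """Hypothetical F_dG: maps edited-space x to template-space x' (a fixed offset here)."""
    return x + np.array([0.1, 0.0, 0.0])

def texture_field(x):
    """Hypothetical F_dT: per-point modification color m' (a constant tint here)."""
    return np.tile(np.array([0.9, 0.7, 0.1]), (x.shape[0], 1))

def render_ray(sigma, rgb, deltas):
    """Standard NeRF quadrature: C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i."""
    alpha = 1.0 - np.exp(-sigma * deltas)
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))  # T_i
    return (trans * alpha) @ rgb

def render_edited_pixel(origin, direction, n_samples=64, near=0.0, far=4.0, blend=0.5):
    t = np.linspace(near, far, n_samples)
    x = origin + t[:, None] * direction            # queries in the edited space
    deltas = np.full(n_samples, (far - near) / n_samples)
    x_template = geometric_field(x)                # x -> x'
    sigma, rgb_template = template_nerf(x_template)
    m = texture_field(x)                           # modification colors m'
    i_o = render_ray(sigma, rgb_template, deltas)  # deformed template pixel
    i_m = render_ray(sigma, m, deltas)             # color modification pixel
    # Assumed fixed linear blend in place of the learned compositing layer.
    return (1.0 - blend) * i_o + blend * i_m, i_o, i_m

pixel, i_o, i_m = render_edited_pixel(np.array([0.0, 0.0, -2.0]), np.array([0.0, 0.0, 1.0]))
```

Keeping the two renderings separate until the final blend mirrors the structure described above: $\hat{I}_{o}$ depends only on the deformed template, while $\hat{I}_{m}$ carries the texture change.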

Geometric Editing with Users’ Target Image

We enable users to edit a neural radiance field with a single image by leveraging geometric prior models, such as neural implicit shape representations or depth prediction.
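The abstract mentions cyclic constraints with a proxy mesh for geometric supervision. The sketch below shows one plausible reading under loudly stated assumptions. It assumes a proxy mesh whose vertices are known both before the edit (template) and after it (edited), for example after fitting a shape prior to the target image. A forward map (edited to template, playing the role of $F_{\Delta G}$) is supervised by these correspondences, and a hypothetical inverse map closes the cycle. Both maps are plain translations, and `deform`, `fit_translation`, and `cyclic_losses` are illustrative names, not the paper's code.

```python
import numpy as np

def deform(points, offset):
    """Hypothetical deformation: a rigid translation standing in for a learned field."""
    return points + offset

def fit_translation(src, dst):
    """Least-squares translation t minimising sum ||src + t - dst||^2 (the mean difference)."""
    return np.mean(dst - src, axis=0)

def cyclic_losses(edited_verts, template_verts, fwd_offset, inv_offset):
    """Correspondence loss for edited -> template plus a cycle term back to the edited space.

    Row i of edited_verts and template_verts is assumed to be the same proxy-mesh vertex."""
    to_template = deform(edited_verts, fwd_offset)
    corr = np.mean(np.sum((to_template - template_verts) ** 2, axis=-1))
    back = deform(to_template, inv_offset)
    cycle = np.mean(np.sum((back - edited_verts) ** 2, axis=-1))
    return float(corr), float(cycle)

rng = np.random.default_rng(0)
template = rng.normal(size=(100, 3))                 # proxy-mesh vertices before the edit
edited = template + np.array([0.0, 0.2, 0.0])        # same vertices after a toy "edit"
fwd = fit_translation(edited, template)              # approximately [0, -0.2, 0]
inv = fit_translation(template, edited)              # approximately [0, 0.2, 0]
corr, cycle = cyclic_losses(edited, template, fwd, inv)
_, cycle_bad = cyclic_losses(edited, template, fwd, np.zeros(3))  # inverse ignores the edit
```

A consistent forward/inverse pair drives both losses to zero, while an inverse that ignores the edit leaves a nonzero cycle term (0.04 in this toy case); a real system would replace the translations with learned, spatially varying fields.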

Texture Editing with Users’ Target Image

We can transfer textures from a single image, either by painting new textures onto the car in Photoshop or by using a downloaded Internet image of an object with a different shape as the reference. The texture style of the edited objects matches the target image with the correct semantic meaning.

Texture Editing with Text Prompts

We combine SINE with off-the-shelf text-prompt editing methods by using a single text-edited image as the target, which makes it possible to change an object's appearance in a 360° scene with vivid effects while keeping the background unchanged.
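Keeping the background unchanged is the role of the feature-cluster-based regularization named in the abstract. As a minimal sketch under assumptions, per-pixel features (here toy 2D vectors rather than features from any real pretrained network) are grouped with a small k-means, the cluster closest to a hypothetical `target_feature` of the edited object is treated as editable, and color changes in every other cluster are penalized. The helper names `kmeans` and `preservation_loss` are illustrative, and the paper's actual feature extractor and cluster selection may differ.

```python
import numpy as np

def kmeans(features, k, iters=20):
    """Plain k-means with deterministic farthest-point initialisation."""
    centers = [features[0]]
    for _ in range(1, k):
        d = np.min([np.sum((features - c) ** 2, axis=-1) for c in centers], axis=0)
        centers.append(features[np.argmax(d)])
    centers = np.array(centers, dtype=float)
    for _ in range(iters):
        d = np.sum((features[:, None, :] - centers[None, :, :]) ** 2, axis=-1)
        labels = np.argmin(d, axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = features[labels == j].mean(axis=0)
    return labels, centers

def preservation_loss(orig_rgb, edited_rgb, features, target_feature, k=2):
    """Mean squared color change over pixels outside the (hypothetical) edited cluster."""
    labels, centers = kmeans(features, k)
    target = np.argmin(np.sum((centers - target_feature) ** 2, axis=-1))
    keep = labels != target                          # pixels that should stay unchanged
    if not np.any(keep):
        return 0.0
    return float(np.mean(np.sum((edited_rgb[keep] - orig_rgb[keep]) ** 2, axis=-1)))

# Toy scene: 50 background pixels (features near 0) and 50 object pixels (features near 5).
feats = np.vstack([np.zeros((50, 2)), np.full((50, 2), 5.0)])
orig = np.zeros((100, 3))
edited = orig.copy()
edited[50:] = 1.0                                    # only the object is recolored
loss_ok = preservation_loss(orig, edited, feats, np.array([5.0, 5.0]))
leaky = edited.copy()
leaky[:50] = 0.5                                     # the edit also leaks into the background
loss_leak = preservation_loss(orig, leaky, feats, np.array([5.0, 5.0]))
```

Recoloring only the object gives zero loss, while leaking the edit into the background is penalized, which is the behavior such a regularizer is meant to encourage.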

SINE Hybrid Editing Examples

We can combine geometric and texture editing on the same object with users' target images; e.g., the plush toy raises its hands and is painted with textures from a yellow bear, and the airplane extends its wings and is painted gold.

@inproceedings{bao2023sine,
  title={SINE: Semantic-driven Image-based NeRF Editing with Prior-guided Editing Field},
  author={Bao, Chong and Zhang, Yinda and Yang, Bangbang and Fan, Tianxing and Yang, Zesong and Bao, Hujun and Zhang, Guofeng and Cui, Zhaopeng},
  booktitle={The IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR)},
  year={2023}
}