1SenseTime Research

2State Key Lab of CAD & CG, Zhejiang University

* denotes equal contribution and joint first authorship

+ denotes corresponding author

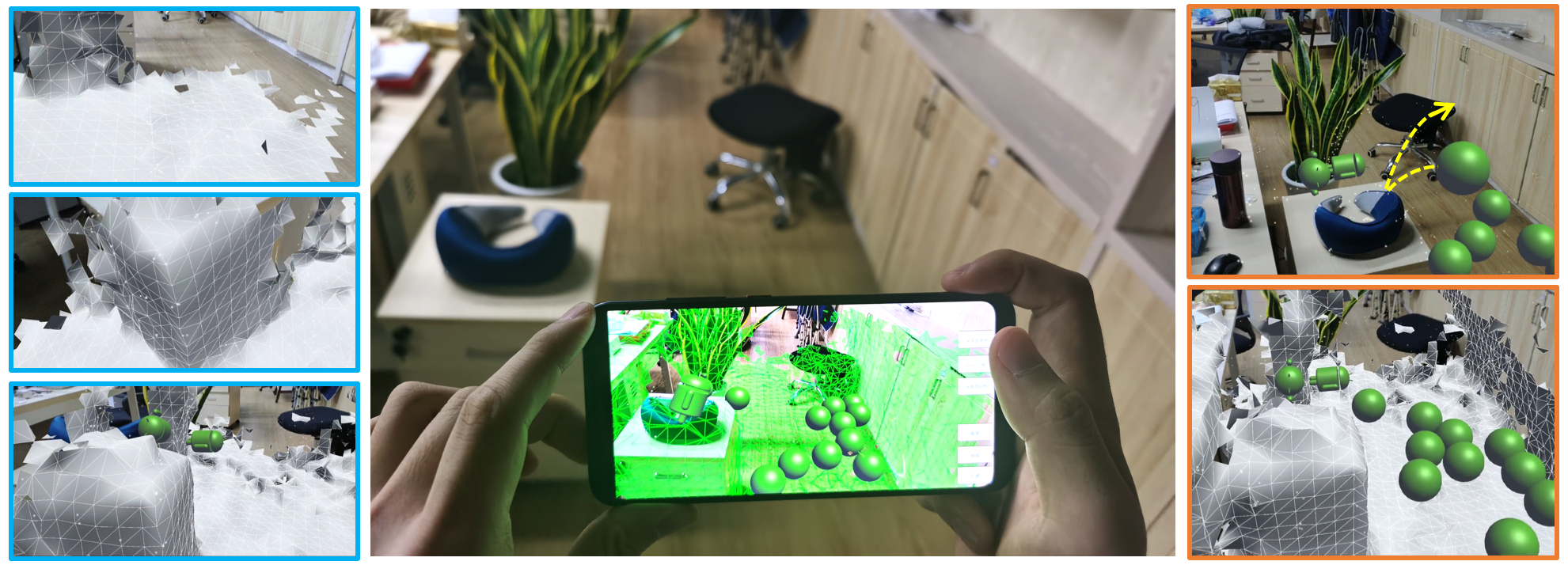

We present a real-time monocular 3D reconstruction system on a mobile phone, called Mobile3DRecon. Using an embedded monocular camera, our system provides online mesh generation on the back end together with real-time 6DoF pose tracking on the front end, so that users can achieve realistic AR effects and interactions on mobile phones. Unlike most existing state-of-the-art systems, which produce only point-cloud-based 3D models online or surface meshes offline, we propose a novel online incremental mesh generation approach that achieves fast online dense surface mesh reconstruction to satisfy the demands of real-time AR applications. For each keyframe of 6DoF tracking, we perform robust monocular depth estimation, using a multi-view semi-global matching method followed by depth refinement post-processing. The proposed mesh generation module incrementally fuses each estimated keyframe depth map into an online dense surface mesh, which is useful for achieving realistic AR effects such as occlusions and collisions. We verify our real-time reconstruction results on two mid-range mobile platforms. Experiments with quantitative and qualitative evaluation demonstrate the effectiveness of the proposed monocular 3D reconstruction system, which can handle occlusions and collisions between virtual objects and real scenes to achieve realistic AR effects.

Our pipeline performs incremental online mesh generation. 6DoF poses are tracked with a keyframe-based visual-inertial SLAM system, which maintains a keyframe pool on the back end and runs a global BA to refine keyframe poses, feeding the result back to the front-end tracking. Once 6DoF tracking has been initialized normally on the front end, the dense depth map of the latest incoming keyframe, with its globally optimized pose, is estimated online by multi-view SGM, using a subset of the previous keyframes as reference frames. A convolutional neural network then refines the depth noise. The refined keyframe depth map is fused to generate the dense surface mesh. High-level AR applications can use this real-time dense mesh and the 6DoF SLAM poses to achieve realistic AR effects on the front end.

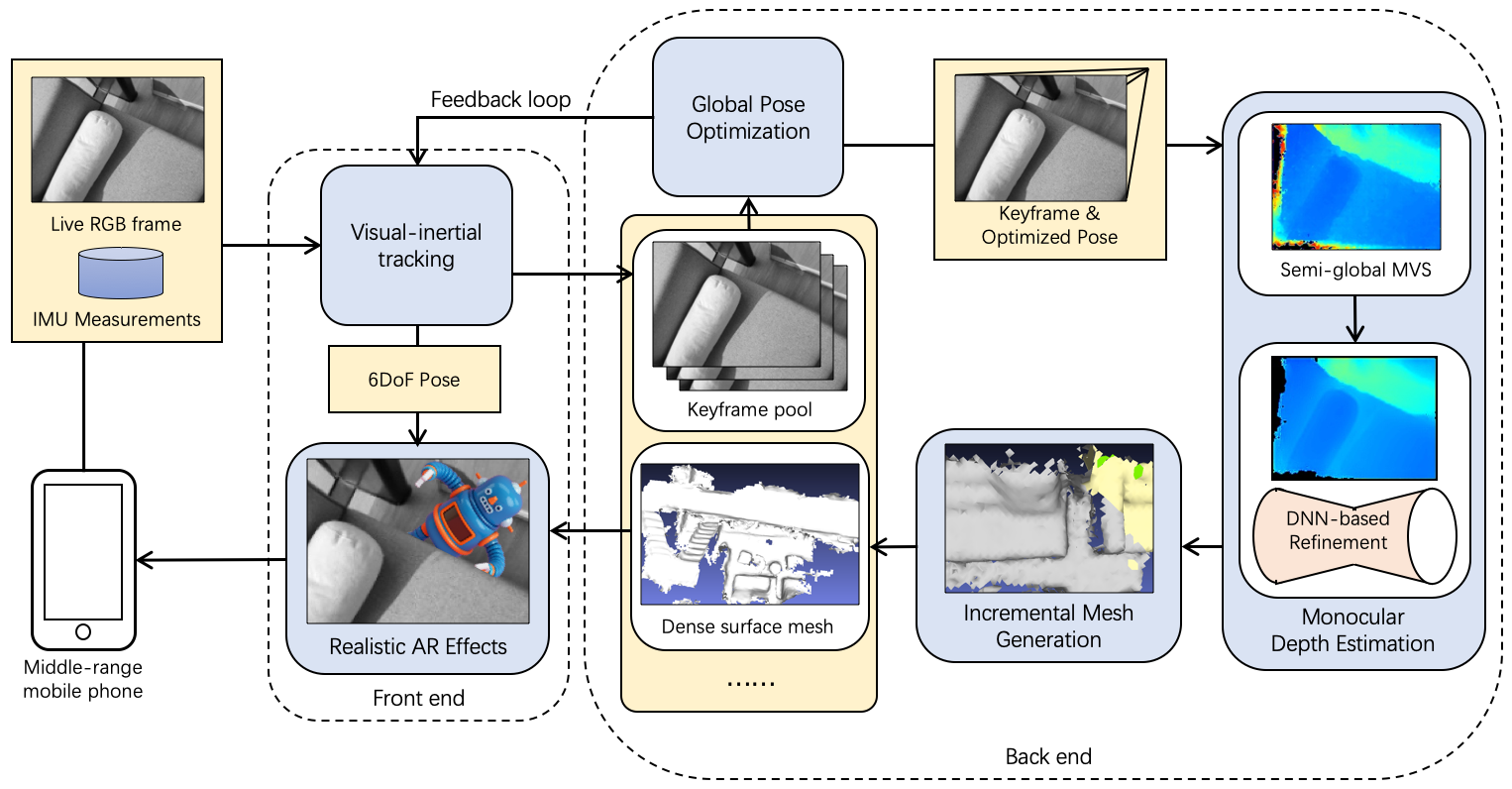

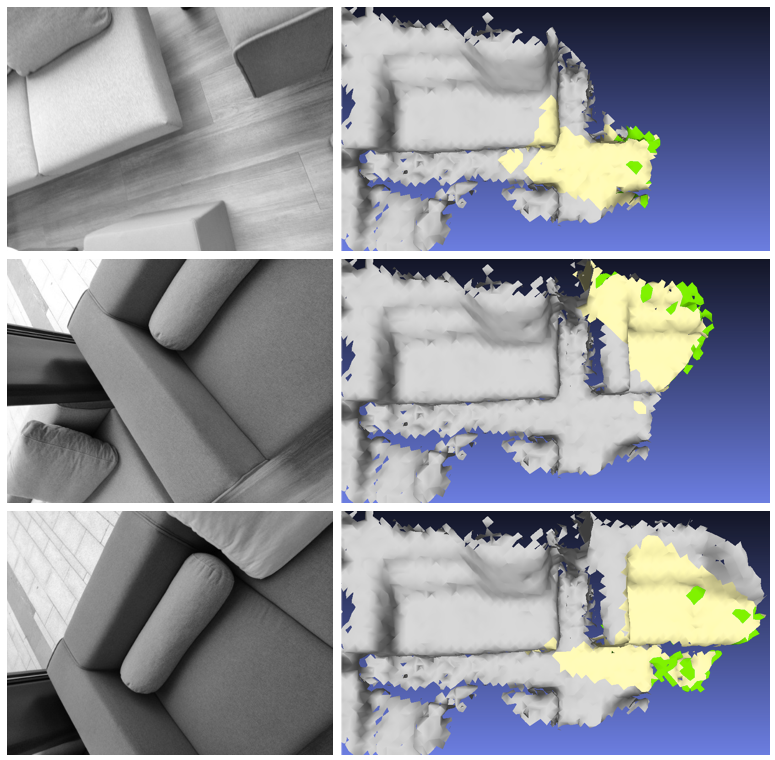

Our monocular depth estimation results on two representative keyframes from the sequence “Sofa”. (a) The source keyframe image and its two selected reference keyframe images. (b) The depth estimation result of semi-global MVS and the corresponding point cloud obtained by back-projection. (c) The result after confidence-based depth filtering and its corresponding point cloud. (d) The final depth estimation result after DNN-based refinement with its corresponding point cloud.

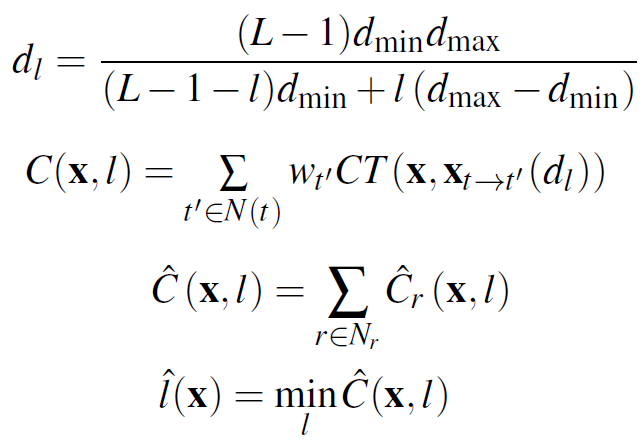

We estimate the depth map using an SGM-based multi-view stereo approach, which is carried out in a uniformly sampled inverse depth space.
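To illustrate what uniform sampling in inverse depth space means, here is a minimal sketch. The function name, the depth range, and the number of levels are assumptions chosen for illustration and are not taken from the paper. The hypotheses are evenly spaced in 1/d, so they are denser near the camera and sparser far away.

```python
def inverse_depth_samples(d_min, d_max, num_levels):
    """Return depth hypotheses that are uniformly spaced in inverse depth.

    d_min, d_max and num_levels are illustrative parameters, not values
    from the paper. The list is ordered from the farthest to the nearest depth.
    """
    if not (0.0 < d_min < d_max) or num_levels < 2:
        raise ValueError("need 0 < d_min < d_max and at least two levels")
    inv_far = 1.0 / d_max    # smallest inverse depth
    inv_near = 1.0 / d_min   # largest inverse depth
    step = (inv_near - inv_far) / (num_levels - 1)
    return [1.0 / (inv_far + i * step) for i in range(num_levels)]


depths = inverse_depth_samples(0.5, 4.0, 8)
```

Because the spacing is constant in 1/d, neighboring hypotheses correspond to roughly equal steps in image-space disparity, which is why this parameterization is a common choice for stereo matching.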

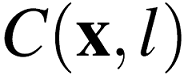

We use a weighted Census Transform to compute the patch similarity cost, with the scores of neighboring frames as weights.

The cost aggregation among multiple keyframes can be accelerated with NEON, and a Winner-Take-All strategy yields the initial depth map. The initial depth map is then refined to sub-level precision by parabola fitting.
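The Winner-Take-All selection and the parabola-fitting refinement can be sketched for a single pixel as follows. This is a minimal illustration under assumptions: `costs` is a hypothetical list of aggregated costs, one per depth level, and the function name is ours. The sketch picks the level with the lowest cost, then fits a parabola through that cost and its two neighbors to obtain a fractional level index.

```python
def wta_with_parabola(costs):
    """Return a fractional depth-level index for one pixel.

    costs: aggregated matching cost per depth level (hypothetical input).
    Winner-Take-All picks the minimum; a parabola through the minimum and
    its two neighbors gives the sub-level offset.
    """
    best = min(range(len(costs)), key=costs.__getitem__)
    # No refinement at the borders, where one neighbor is missing.
    if best == 0 or best == len(costs) - 1:
        return float(best)
    c_prev, c_best, c_next = costs[best - 1], costs[best], costs[best + 1]
    curvature = c_prev - 2.0 * c_best + c_next
    if curvature <= 0.0:
        return float(best)  # flat or degenerate cost curve, keep the integer level
    offset = (c_prev - c_next) / (2.0 * curvature)
    return best + offset


# Costs sampled from a parabola whose true minimum lies at level 2.3.
level = wta_with_parabola([(i - 2.3) ** 2 for i in range(6)])
```

The fractional level would then be mapped back to a depth value through the sampling of the depth space.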

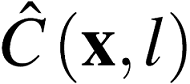

After the depth filtering, we employ a deep neural network to refine the remaining depth noise. Our network has a two-stage refinement structure. The first stage is an image-guided sub-network, which combines the filtered depth with the corresponding gray image to infer a coarse refinement result. The second stage is a residual U-Net, which further refines the coarse result to produce the final refined depth.

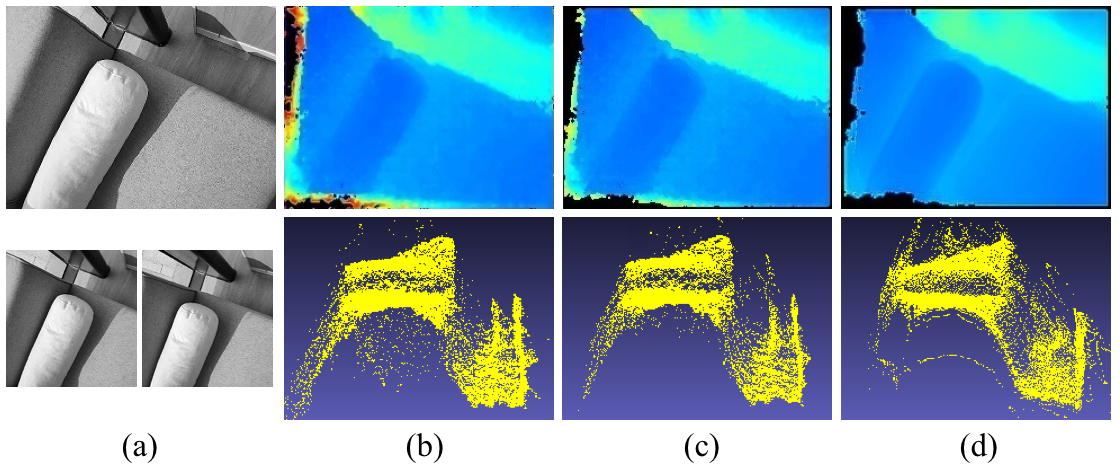

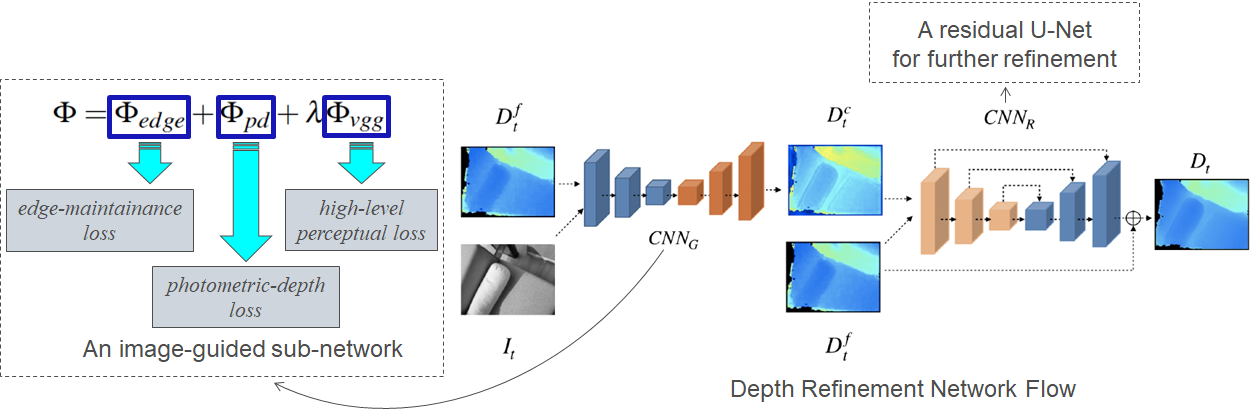

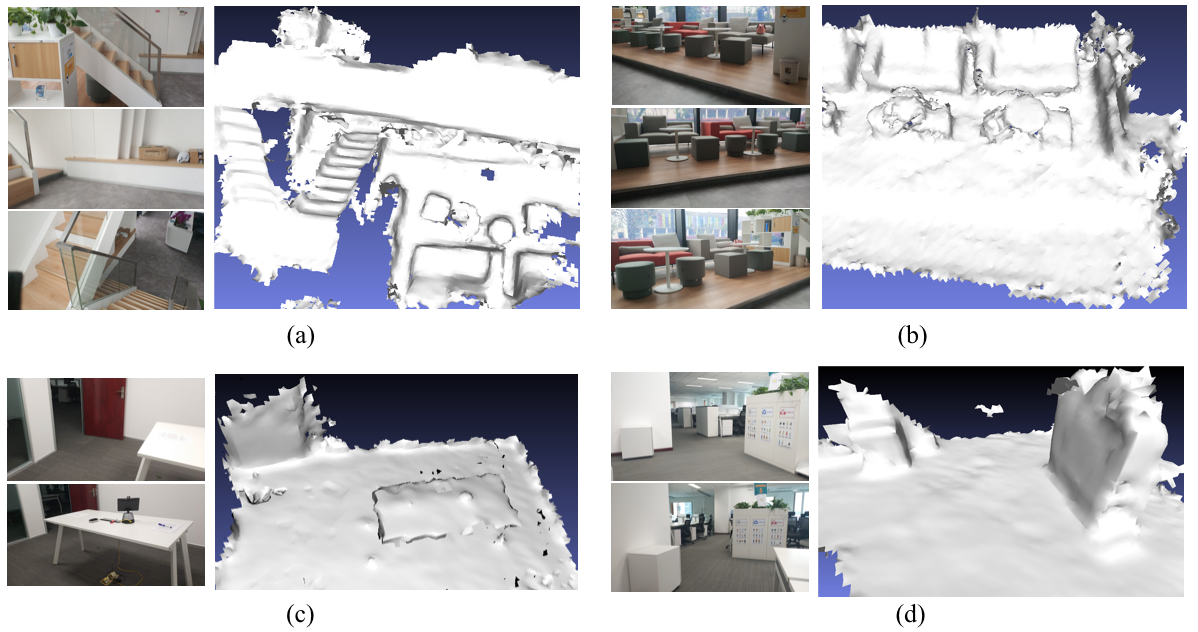

Surface mesh generation results of our four experimental sequences (a) "Indoor stairs", (b) "Sofa", (c) "Desktop" and (d) "Cabinet" captured by OPPO R17 Pro. (a) shows some representative keyframes of each sequence.

We present a novel incremental mesh generation approach that can update the surface mesh in real time and is better suited to AR applications on mobile platforms with limited computing resources.

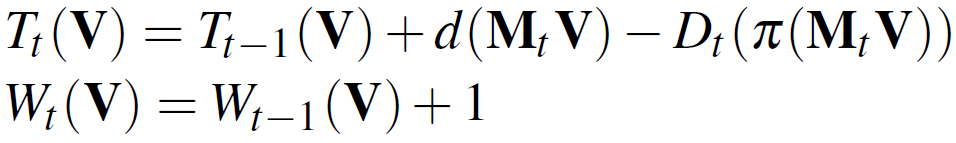

Each estimated depth map is integrated into the TSDF voxels, with associated voxels generated / updated in the conventional way.
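As a point of reference, a per-voxel TSDF update is often written as a weighted running average. The sketch below assumes that this standard formulation is what "the conventional way" refers to; the function name, the truncation distance, and the weight cap are illustrative assumptions rather than details from the paper.

```python
def update_tsdf(tsdf, weight, sdf, trunc, obs_weight=1.0, max_weight=64.0):
    """Fuse one signed-distance observation into a voxel.

    tsdf, weight: current voxel state (weight 0 means never observed).
    sdf: signed distance of the voxel to the observed surface along the ray
         (positive in front of the surface).
    trunc, obs_weight, max_weight: assumed, illustrative parameters.
    """
    if sdf < -trunc:
        # Far behind the observed surface: occluded, leave the voxel unchanged.
        return tsdf, weight
    d = min(1.0, sdf / trunc)  # truncate and normalize to [-1, 1]
    new_weight = weight + obs_weight
    new_tsdf = (tsdf * weight + d * obs_weight) / new_weight
    return new_tsdf, min(new_weight, max_weight)


state = update_tsdf(0.0, 0.0, 0.05, 0.1)   # first observation creates the value
state = update_tsdf(*state, -0.05, 0.1)    # second observation is averaged in
```

Capping the weight keeps the average responsive to later observations instead of letting early measurements dominate indefinitely.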

To handle the influence of dynamic objects, we project existing voxels to the current frame for depth visibility checking.
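One way such a visibility check could look is sketched below. It assumes a pinhole camera and treats a voxel as conflicting with the current frame when it lies clearly in front of the observed depth, that is, in space the camera now sees through. The decision rule, the margin, and all names are our assumptions for illustration; the paper's exact criterion may differ.

```python
def conflicts_with_depth(point_cam, depth_map, fx, fy, cx, cy, margin):
    """Return True if a voxel center lies in observed free space.

    point_cam: voxel center (x, y, z) in the current camera frame.
    depth_map: 2D list of depths, with values <= 0 meaning invalid (assumed).
    fx, fy, cx, cy: pinhole intrinsics; margin: tolerance in depth units.
    """
    x, y, z = point_cam
    if z <= 0.0:
        return False  # behind the camera, cannot be checked
    u = int(round(fx * x / z + cx))
    v = int(round(fy * y / z + cy))
    if not (0 <= v < len(depth_map) and 0 <= u < len(depth_map[0])):
        return False  # projects outside the image
    observed = depth_map[v][u]
    if observed <= 0.0:
        return False  # no valid depth at this pixel
    return z < observed - margin


depth = [[2.0] * 4 for _ in range(4)]
stale = conflicts_with_depth((0.0, 0.0, 1.0), depth, 2.0, 2.0, 2.0, 2.0, 0.1)
```

Voxels flagged this way are candidates left behind by an object that has since moved, so a system could down-weight or clear them.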

We use an incremental marching cubes algorithm that maintains a status variable for each voxel, indicating whether the voxel is newly added, updated, or unchanged. For each keyframe, we only need to extract or update mesh triangles from the newly added and updated cubes.
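The bookkeeping behind this idea can be sketched as follows. The class, the status constants, and the sparse dictionary layout are hypothetical, and the triangle extraction itself is omitted. The sketch assumes that a cube is identified by its minimum-corner voxel, so a changed voxel affects the eight cubes that share it as a corner.

```python
from itertools import product

UNCHANGED, ADDED, UPDATED = 0, 1, 2  # hypothetical status values


class VoxelGrid:
    """Sparse voxel grid that tracks which voxels changed since the last meshing."""

    def __init__(self):
        self.tsdf = {}    # (x, y, z) -> TSDF value
        self.status = {}  # (x, y, z) -> status flag

    def write(self, key, value):
        # Mark the voxel as newly added or as updated, then store the value.
        self.status[key] = UPDATED if key in self.tsdf else ADDED
        self.tsdf[key] = value

    def dirty_cubes(self):
        """Cubes (by minimum corner) that touch an added or updated voxel."""
        cubes = set()
        for (x, y, z), flag in self.status.items():
            if flag == UNCHANGED:
                continue
            for dx, dy, dz in product((0, 1), repeat=3):
                cubes.add((x - dx, y - dy, z - dz))
        return cubes

    def clear_status(self):
        # Call after the mesh triangles of the dirty cubes were re-extracted.
        for key in self.status:
            self.status[key] = UNCHANGED


grid = VoxelGrid()
grid.write((0, 0, 0), 0.1)
to_remesh = grid.dirty_cubes()  # only these cubes need marching cubes
grid.clear_status()
```

Per keyframe, marching cubes would run only over `dirty_cubes()`, so the cost scales with the newly observed region rather than with the whole map.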

Comparison of the final surface meshes obtained by fusing the depth maps estimated by our Mobile3DRecon and by some state-of-the-art methods on the sequence “Outdoor stairs”, captured by OPPO R17 Pro. (a) Some representative keyframes. (b) Surface mesh generated by fusing ToF depth maps. (c) DPSNet. (d) MVDepthNet. (e) Our Mobile3DRecon.

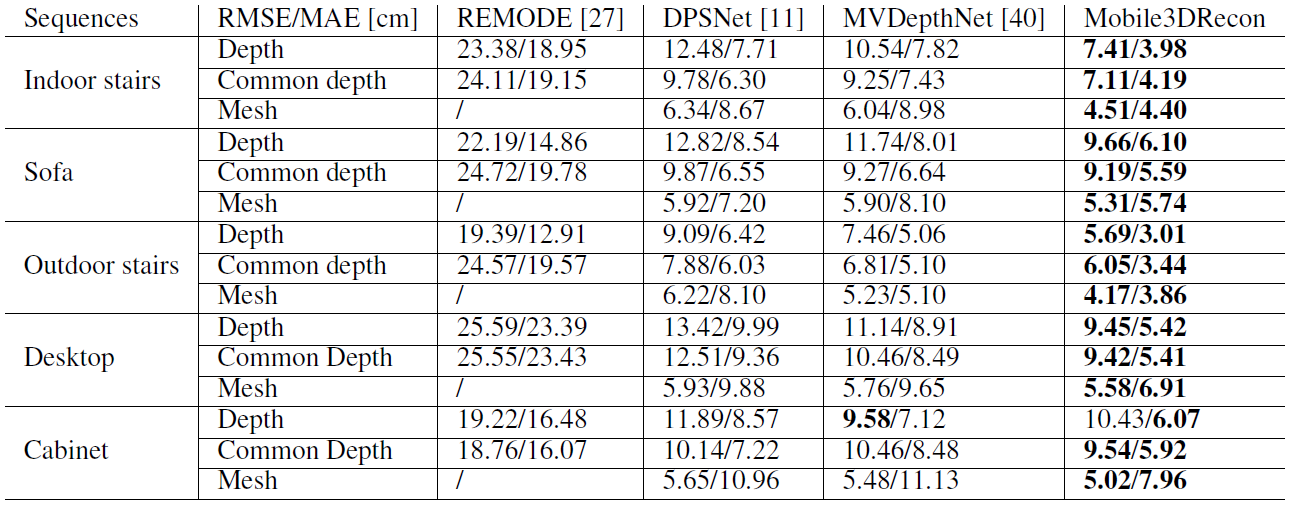

The RMSEs and MAEs of the depth and surface mesh results on our five experimental sequences captured by OPPO R17 Pro with ToF depth measurements as GT. For depth evaluation, only the pixels with valid depths in both GT and the estimated depth map will participate in error calculation. For common depth evaluation, only the pixels with common valid depths in all the methods and GT will participate in evaluation.
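The masking rule described in this caption can be made concrete with a short sketch. It assumes that depth maps are flattened to lists and that non-positive values mark invalid pixels; both conventions, and the function names, are our assumptions.

```python
import math


def depth_errors(gt, est, indices=None):
    """RMSE and MAE over pixels that are valid in both gt and est.

    gt, est: flattened depth maps; values <= 0 are treated as invalid (assumed).
    indices: optional subset of pixel indices to restrict the evaluation to.
    """
    if indices is None:
        indices = range(len(gt))
    diffs = [est[i] - gt[i] for i in indices if gt[i] > 0 and est[i] > 0]
    if not diffs:
        raise ValueError("no pixel is valid in both depth maps")
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    mae = sum(abs(d) for d in diffs) / len(diffs)
    return rmse, mae


def common_valid_indices(gt, estimates):
    """Pixels that are valid in the GT and in every method's estimate."""
    return [i for i, g in enumerate(gt)
            if g > 0 and all(est[i] > 0 for est in estimates)]


gt = [1.0, 2.0, 0.0, 4.0]
ours = [1.5, 0.0, 3.0, 3.5]
rmse, mae = depth_errors(gt, ours)
```

For the common depth evaluation, the index list from `common_valid_indices` would be passed as `indices` so that every method is scored on exactly the same pixels.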

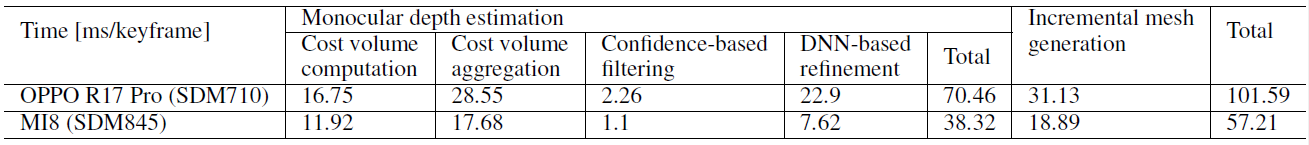

The detailed per-keyframe time consumption (in milliseconds) of each substep of our Mobile3DRecon. The timings are reported on two mobile platforms: OPPO R17 Pro with SDM710 and MI8 with SDM845.

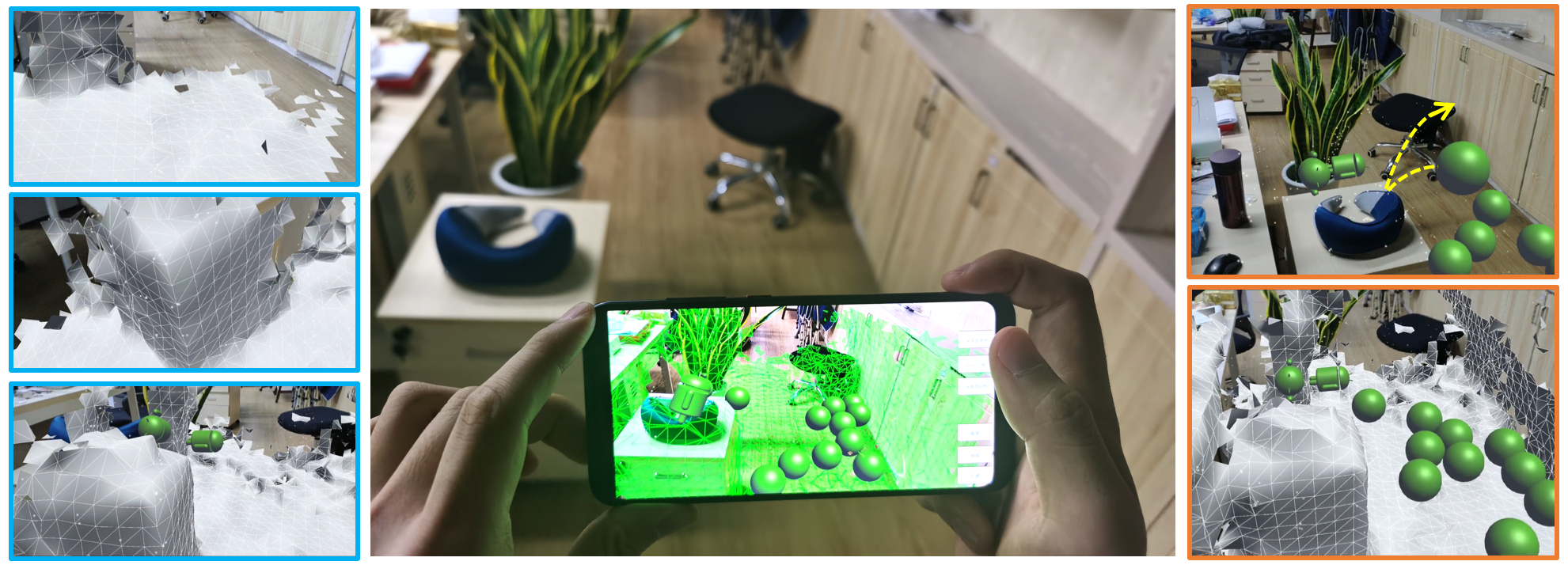

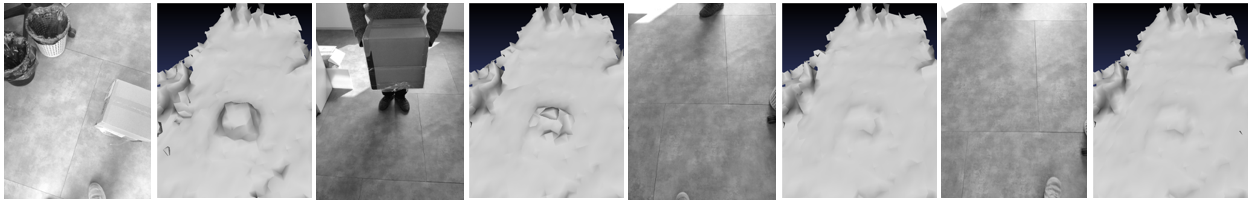

AR applications of Mobile3DRecon on mobile platforms: The first row shows the 3D reconstruction and an occlusion effect of an indoor scene on OPPO R17 Pro. The second and third rows illustrate AR occlusion and collision effects of another two scenes on MI8.

@article{yang2020mobile3drecon,
  author={Yang, Xingbin and Zhou, Liyang and Jiang, Hanqing and Tang, Zhongliang and Wang, Yuanbo and Bao, Hujun and Zhang, Guofeng},
  journal={IEEE Transactions on Visualization and Computer Graphics},
  title={{Mobile3DRecon}: Real-time Monocular {3D} Reconstruction on a Mobile Phone},
  year={2020},
  volume={26},
  number={12},
  pages={3446-3456}
}

We would like to thank Feng Pan and Li Zhou for their kind help in the development of the mobile reconstruction system and the experimental evaluation. This work was partially supported by NSF of China (Nos. 61672457 and 61822310), and the Fundamental Research Funds for the Central Universities (No. 2019XZZX004-09).