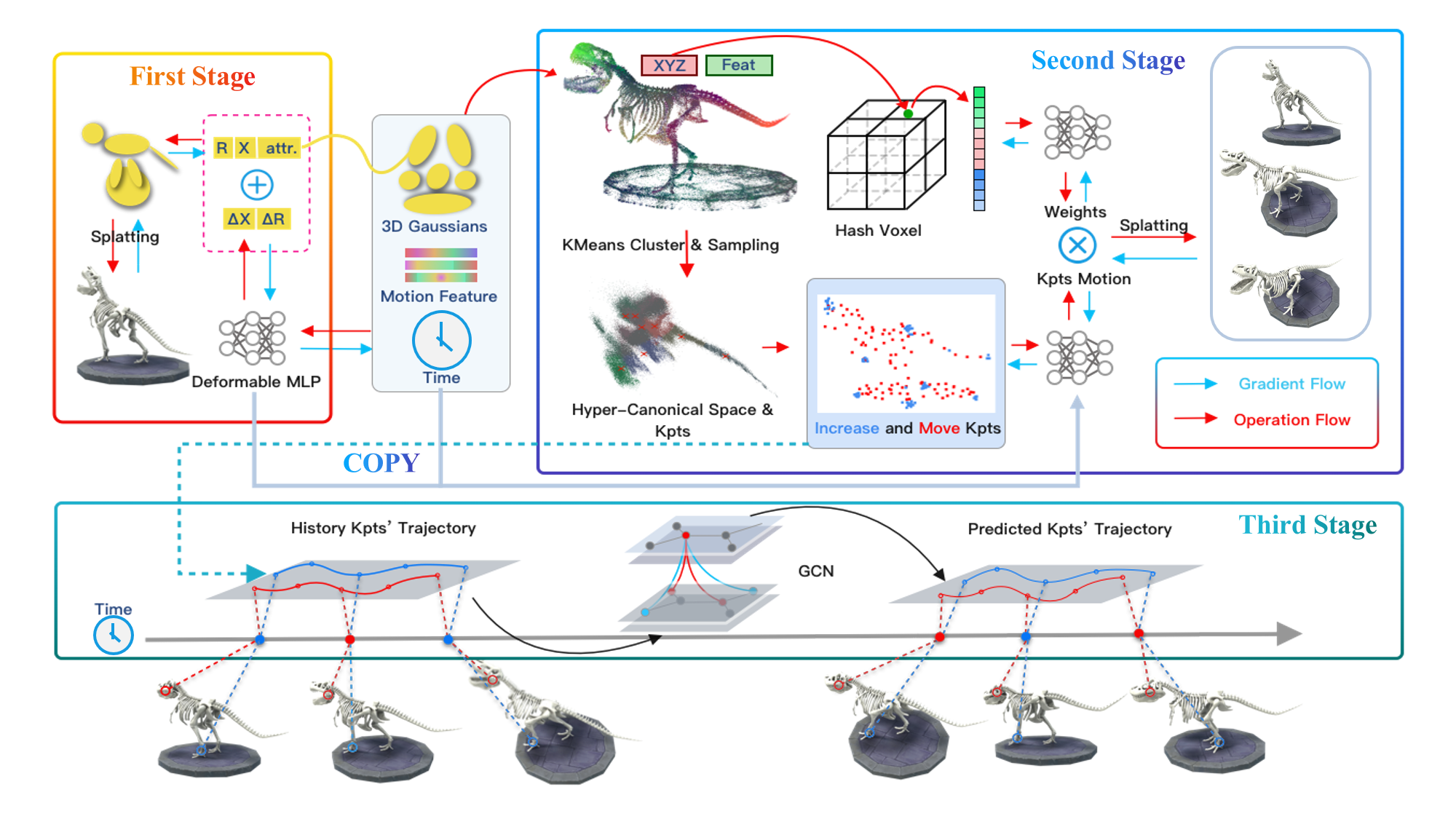

Forecasting future scenarios in dynamic environments is essential for intelligent decision-making and navigation, yet it remains an open challenge in computer vision and robotics. Traditional approaches fall short in one of two ways: video prediction cannot forecast from arbitrary viewpoints, and novel-view synthesis cannot predict temporal dynamics. In this paper, we introduce GaussianPrediction, a novel framework that equips 3D Gaussian representations with dynamic scene modeling and future scenario synthesis. Given video observations of a dynamic scene, GaussianPrediction can forecast future states from any viewpoint. To this end, we first propose a 3D Gaussian canonical space with deformation modeling to capture the appearance and geometry of dynamic scenes, and integrate a lifecycle property into the Gaussians to handle irreversible deformations. To make prediction feasible and efficient, we develop a concentric motion distillation approach that distills the scene motion into a set of key points. Finally, a Graph Convolutional Network is employed to predict the motions of the key points, enabling the rendering of photorealistic images of future scenarios. Our framework shows outstanding performance on both synthetic and real-world datasets, demonstrating its efficacy in predicting

Optimization starts with the initial 3D Gaussians. In the first stage, we optimize the parameters of the 3D Gaussians, the motion feature, and the deformable MLP to build a Hyper-Canonical space. In the second stage, we first initialize the key points in the Hyper-Canonical space with the K-Means algorithm. We then learn time-independent weights for each Gaussian and deform the 3D Gaussians according to the motion of the key points. Finally, we employ a GCN (Graph Convolutional Network) to learn the relationships between key points, predict their future motion, and render future scenes from novel views.
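The second stage can be illustrated with a minimal sketch in plain Python. It is not the implementation used in the paper: the function names (`kmeans`, `blend_weights`, `deform`), the `temperature` parameter, and the toy data are hypothetical. In particular, the per-Gaussian weights are learned in our framework, whereas the sketch substitutes a fixed distance-based softmax purely to show how time-independent weights blend key point motion into the motion of each Gaussian.

```python
import math
import random


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means on 3D points; returns k centroids (the key points)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # pick k distinct input points as initial centers
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centers[c]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster; keep it if the cluster is empty.
        centers = [
            tuple(sum(axis) / len(cl) for axis in zip(*cl)) if cl else centers[j]
            for j, cl in enumerate(clusters)
        ]
    return centers


def blend_weights(point, key_points, temperature=0.5):
    """Time-independent weights of one Gaussian center over all key points.

    ASSUMPTION: a softmax over negative squared distances stands in for the
    learned weights described in the text.
    """
    logits = [-math.dist(point, kp) ** 2 / temperature for kp in key_points]
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def deform(point, weights, key_point_offsets):
    """Move a Gaussian center by the weighted sum of the key point offsets."""
    return tuple(
        point[axis] + sum(w * off[axis] for w, off in zip(weights, key_point_offsets))
        for axis in range(3)
    )


# Toy scene: two groups of Gaussian centers in the canonical space.
gaussians = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (10.0, 0.0, 0.0), (10.1, 0.0, 0.0)]
key_points = kmeans(gaussians, k=2)

# Hypothetical key point motion at one time step: each key point gets the same offset.
offsets = [(1.0, 0.0, 0.0) for _ in key_points]

# The weights are computed once and reused for every time step.
weights = [blend_weights(g, key_points) for g in gaussians]
deformed = [deform(g, w, offsets) for g, w in zip(gaussians, weights)]
print(deformed)
```

Because the weights do not depend on time, only the key point offsets change from frame to frame. This is what makes prediction tractable: a model such as the GCN only has to forecast the motion of a small set of key points rather than of every Gaussian.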

@article{2405.19745,
  Author = {Boming Zhao and Yuan Li and Ziyu Sun and Lin Zeng and Yujun Shen and Rui Ma and Yinda Zhang and Hujun Bao and Zhaopeng Cui},
  Title = {GaussianPrediction: Dynamic 3D Gaussian Prediction for Motion Extrapolation and Free View Synthesis},
  Year = {2024},
  Eprint = {arXiv:2405.19745},
  Doi = {10.1145/3641519.3657417},
}