Collaborative Neural Point-based SLAM System

NeurIPS 2023

Abstract

This paper presents a collaborative implicit neural simultaneous localization and mapping (SLAM) system with RGB-D image sequences, which consists of complete front-end and back-end modules including odometry, loop detection, sub-map fusion, and global refinement. In order to enable all these modules in a unified framework, we propose a novel neural point based 3D scene representation in which each point maintains a learnable neural feature for scene encoding and is associated with a certain keyframe. Moreover, a distributed-to-centralized learning strategy is proposed for the collaborative implicit SLAM to improve consistency and cooperation. A novel global optimization framework is also proposed to improve the system accuracy like traditional bundle adjustment. Experiments on various datasets demonstrate the superiority of the proposed method in both camera tracking and mapping.

System Overview

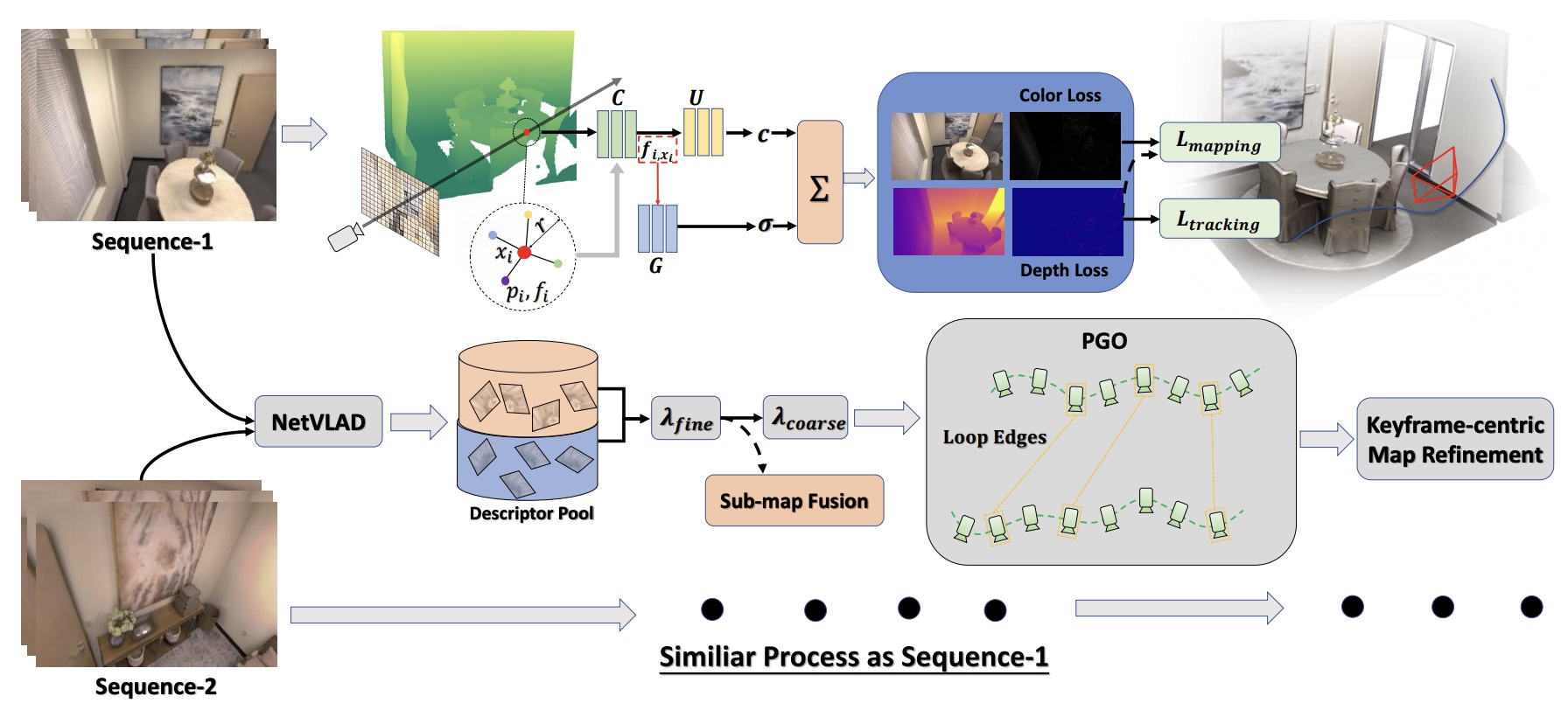

Our system takes one or more RGB-D streams as input and performs tracking and mapping as follows. From left to right, we conduct differentiable ray marching in a neural point field to predict depth and color. To obtain the feature embedding of a sample point along a ray, we interpolate the features of neighboring neural points within a sphere of radius $r$. MLPs decode these feature embeddings into density and radiance for volume rendering. By minimizing the rendering difference loss, camera motion and the neural field can be optimized. While tracking and mapping, each agent continuously sends keyframe descriptors encoded by NetVLAD to the descriptor pool. The central server fuses sub-maps and performs global pose graph optimization (PGO) based on matched pairs to deepen collaboration. Finally, the workflow ends with keyframe-centric map refinement.
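The interpolation and rendering steps described above can be illustrated with a minimal sketch. It is not the CP-SLAM implementation: the inverse-distance weighting, the function names `interpolate_feature` and `composite`, and the use of per-sample opacities in place of MLP outputs are all assumptions made for illustration.

```python
import math


def interpolate_feature(sample, points, features, radius):
    """Blend the features of neural points lying within `radius` of `sample`.

    Assumption: inverse-distance weights. The text only states that
    neighbor features inside a sphere are interpolated.
    Returns None when the sphere contains no neural point.
    """
    dim = len(features[0])
    acc = [0.0] * dim
    total = 0.0
    for point, feature in zip(points, features):
        dist = math.dist(sample, point)
        if dist <= radius:
            weight = 1.0 / (dist + 1e-8)  # epsilon avoids division by zero
            total += weight
            for i, value in enumerate(feature):
                acc[i] += weight * value
    if total == 0.0:
        return None
    return [a / total for a in acc]


def composite(alphas, values):
    """Alpha-composite per-sample values (depth or one color channel) along a ray.

    alphas[i] is the opacity of sample i, ordered from near to far.
    In a full system these opacities would come from the decoded density.
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    result = 0.0
    for alpha, value in zip(alphas, values):
        result += transmittance * alpha * value
        transmittance *= 1.0 - alpha
    return result
```

For example, `composite(alphas, depths)` gives the rendered depth of one ray, and comparing it with the sensor depth yields one term of a rendering loss.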

Multi-agent Collaboration

In three-agent collaboration, our proposed system processes three image sequences simultaneously for tracking and mapping in a large-scale indoor scene. Benefiting from multi-agent collaboration, CP-SLAM can explore the scene in a shorter time and produce a more complete mesh map.

This figure depicts the trajectories of each agent. Once two sub-graphs are fused, only a low-cost fine-tuning is required to adjust the two neural fields and their corresponding MLPs into a shared domain. Afterward, the two agents can reuse each other’s previous observations and continue accurate tracking. Shared MLPs, neural fields, and the subsequent global pose graph optimization make CP-SLAM a tightly collaborative system. CCM-SLAM failed in some scenes because traditional RGB-based methods are prone to feature mismatching, especially in textureless environments. In contrast, our collaborative NeRF-SLAM system performs robustly.

Single-agent Exploration

As a collaborative NeRF-SLAM system, CP-SLAM is naturally able to support single-agent mode with loop detection and pose graph optimization.
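As a rough illustration of descriptor-based loop detection, the sketch below matches a query keyframe descriptor against a descriptor pool by cosine similarity. The function names, the `(keyframe_id, descriptor)` pool layout, and the `threshold` value are hypothetical and not taken from CP-SLAM; real NetVLAD descriptors are much higher-dimensional than the toy vectors used in practice here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_loop_candidate(query, pool, threshold=0.8):
    """Return the id of the most similar pooled keyframe, or None.

    pool: list of (keyframe_id, descriptor) pairs (hypothetical layout).
    threshold: minimum similarity to accept a match (assumed value).
    """
    best_id, best_score = None, threshold
    for keyframe_id, descriptor in pool:
        score = cosine_similarity(query, descriptor)
        if score >= best_score:
            best_id, best_score = keyframe_id, score
    return best_id
```

An accepted match would then contribute a relative-pose constraint between the two keyframes to the pose graph; estimating that constraint is outside the scope of this sketch.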

Qualitatively, we present trajectories of Room-0-loop and Office-3-loop from NeRF-based methods in the above figure. Our method exhibits a notable superiority over recent methods, primarily attributed to the integration of concurrent front-end and back-end processing, as well as the incorporation of neural point representation. In comparison with frequent jitters in the trajectories of NICE-SLAM and Vox-Fusion, our trajectory is much smoother.

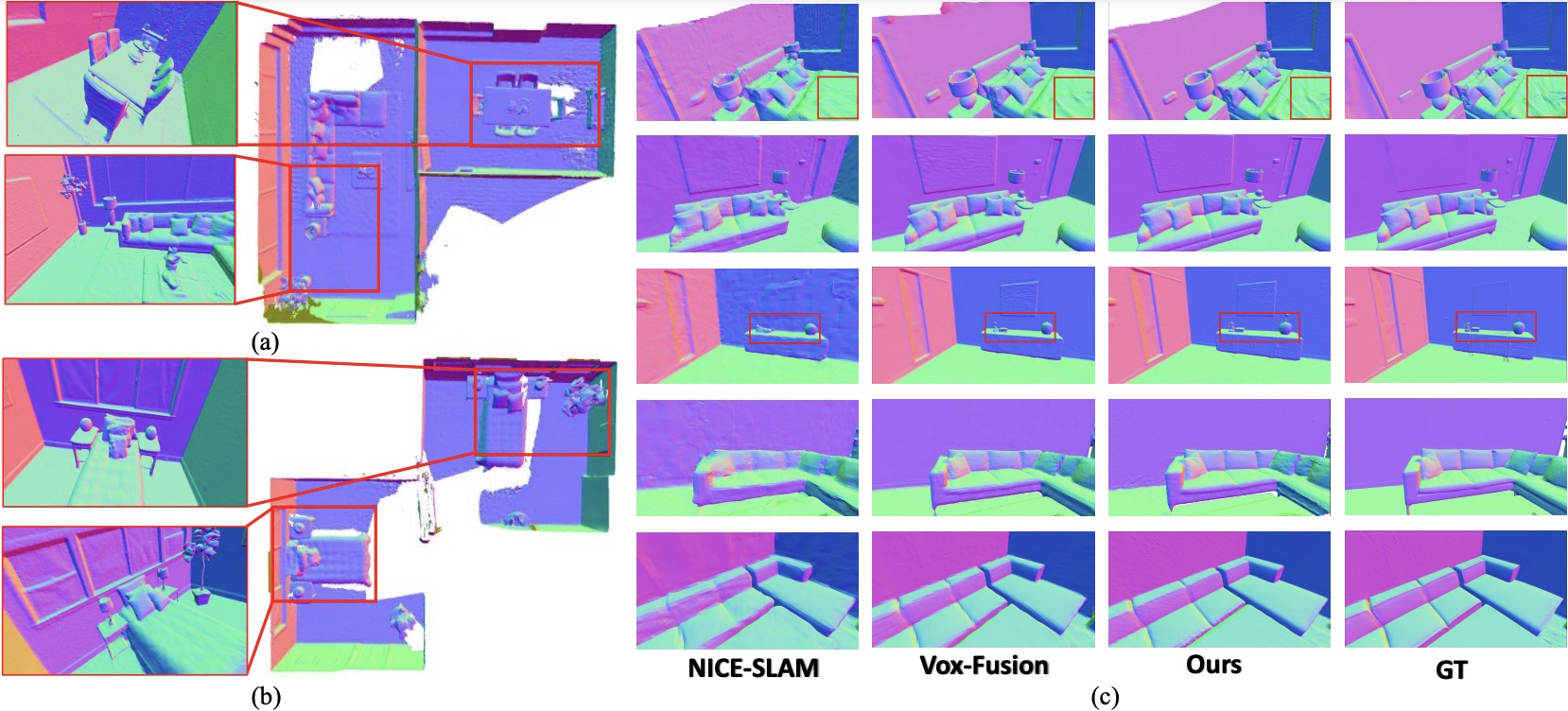

Reconstruction

Collaborative reconstruction results of Apartment-1 (a) and Apartment-0 (b) show good consistency. (c) Our system also achieves more detailed geometry reconstruction on four single-agent datasets; note, for example, the folds of the quilt and the kettle in the red boxes. Holes in the mesh reconstruction indicate unseen areas in our datasets.

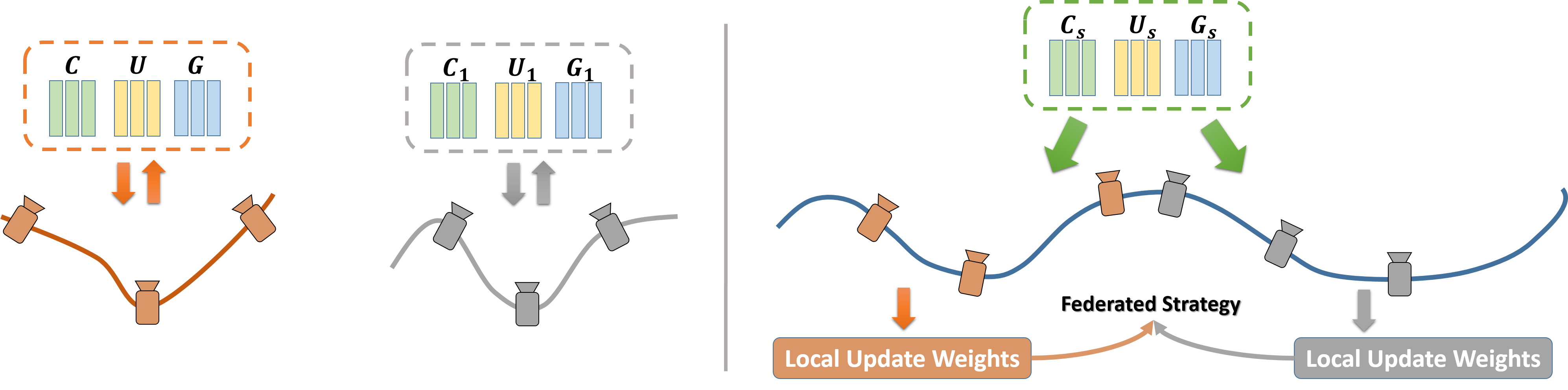

Distributed-to-Centralized Learning

To enhance consistency and cooperation in collaborative SLAM, we adopt a two-stage MLP training strategy. In the first stage (Distributed Stage), each image sequence is treated as a separate individual with its own group of MLPs $\{C_j, U_j, G_j\}$ for sequential tracking and mapping. After loop detection and sub-map fusion, we expect to share common MLPs across all sequences (Centralized Stage). To this end, we introduce a federated learning mechanism, which trains a single network cooperatively across agents. At the time of sub-map fusion, we average each group of MLPs and fine-tune the averaged MLPs on all keyframes to unify the separate domains. Subsequently, we iteratively transfer the shared MLPs to each agent for local training and average the local weights as the final optimization result of the shared MLPs, as shown in the above figure.
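The averaging loop of the Centralized Stage can be sketched as follows. This is a simplified illustration in the style of federated averaging, not the authors' code: weights are plain lists of floats keyed by a parameter name, and `local_trainers` stands in for each agent's local training step. Both are assumptions.

```python
def average_weights(weight_sets):
    """Element-wise mean of several agents' MLP weights.

    weight_sets: list of dicts mapping a parameter name to a list of floats;
    all dicts are assumed to share the same names and shapes.
    """
    count = len(weight_sets)
    averaged = {}
    for name in weight_sets[0]:
        columns = zip(*(ws[name] for ws in weight_sets))
        averaged[name] = [sum(column) / count for column in columns]
    return averaged


def federated_rounds(shared, local_trainers, rounds):
    """Send the shared weights to every agent, train locally, then average.

    local_trainers: one callable per agent (hypothetical) that takes a copy
    of the shared weights and returns locally updated weights.
    """
    for _ in range(rounds):
        local_results = []
        for train in local_trainers:
            # Each agent starts from its own copy of the shared weights.
            start = {name: list(values) for name, values in shared.items()}
            local_results.append(train(start))
        shared = average_weights(local_results)
    return shared
```

A plain mean is the simplest choice here; weighting each agent by its number of keyframes would be a natural variation, but the text above does not say which is used.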

BibTeX

@inproceedings{cp-slam,
  title={CP-SLAM: Collaborative Neural Point-based SLAM System},
  author={Jiarui Hu and Mao Mao and Hujun Bao and Guofeng Zhang and Zhaopeng Cui},
  booktitle={Neural Information Processing Systems (NeurIPS)},
  year={2023}
}